
Introduction

...

- When doing a large import, first test with a small subset of 10-100 rows. Often the same mistake is repeated in every row, so a small test avoids a long wait only to get the same error back for every row.

- For huge uploads (500K to over 1M records), refer to "Tips and tricks to import a large number of records" below.
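One way to produce such a test subset is to copy the header and the first few rows of the full file into a new CSV. The following is a minimal sketch, not part of the application: the function name `write_sample` and the file names are hypothetical, and it assumes the first line of the file is the template header.

```python
import csv


def write_sample(src_path, dst_path, max_rows=100):
    """Copy the header plus the first `max_rows` data rows of a CSV file.

    Returns the number of data rows written (hypothetical helper).
    """
    with open(src_path, newline="", encoding="utf-8") as src, \
         open(dst_path, "w", newline="", encoding="utf-8") as dst:
        reader = csv.reader(src)
        writer = csv.writer(dst)
        header = next(reader, None)
        if header is None:
            return 0  # empty input, nothing to copy
        writer.writerow(header)
        written = 0
        for row in reader:
            if written >= max_rows:
                break
            writer.writerow(row)
            written += 1
        return written
```

For example, `write_sample("specimens_full.csv", "specimens_test.csv", max_rows=50)` would create a 50-row test file that can be imported first to catch column or format mistakes.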

...

Can I import any CSV file?

The data in the CSV file must follow a specific template format. Templates can be downloaded from the application under the import option for each data type.

Can I import

...

participants, visits, specimens, etc. in one go?

Yes, using the 'Master Specimens' template you can import participants, visits, and specimens in one go. For more details, refer to 'Master Template'.

...

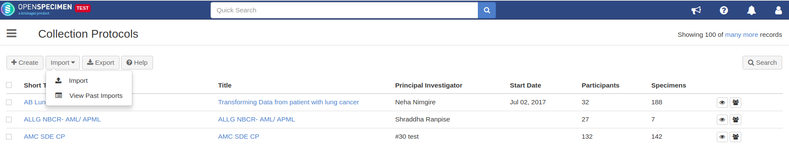

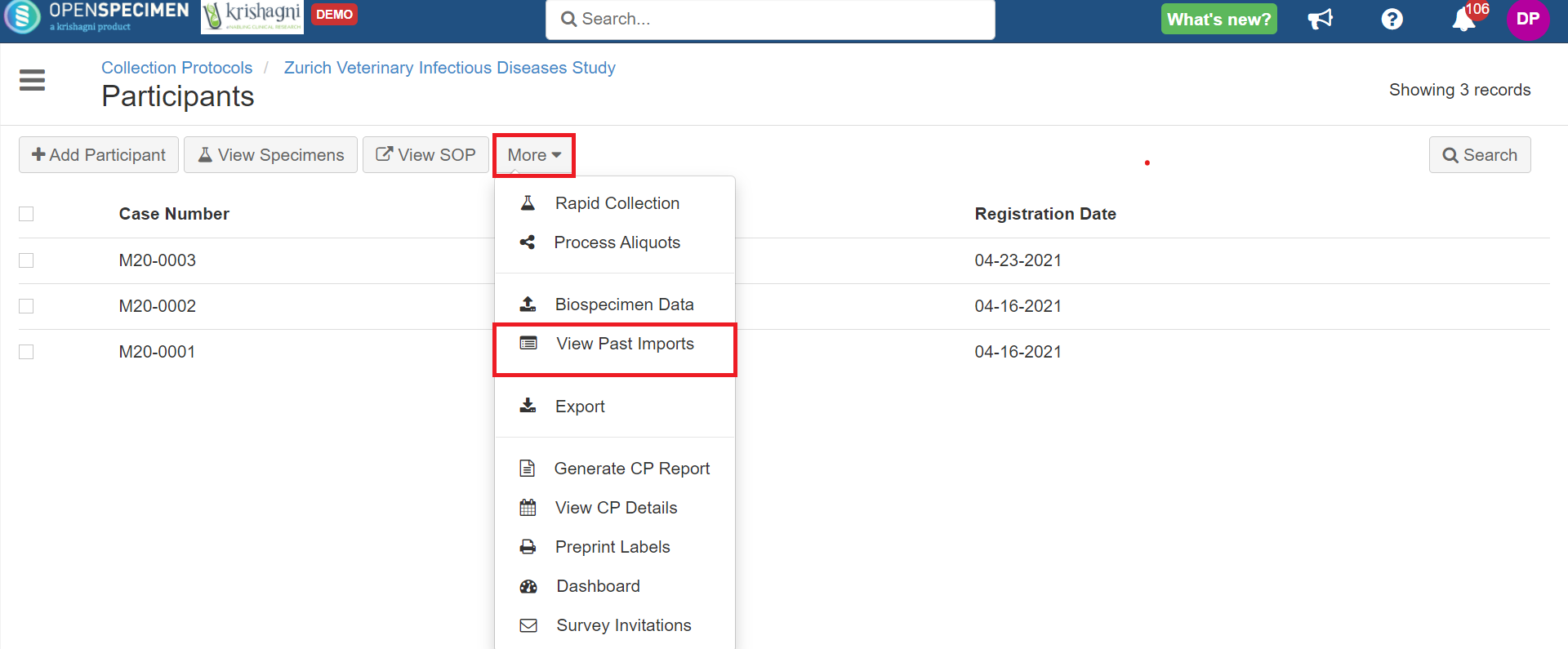

Once the CSV file is imported, a report is generated under 'View Past Imports' in the import feature. The dashboard shows the status of every data import.

From the list view page, click on the 'Import' button → 'View Past Imports'. If you are using bulk import within a CP, click on 'More' → 'View Past Imports'.

How will I know if there are errors?

...

If you want to abort the bulk import job, click on the 'Abort' icon on the jobs page for the specific import job:

...

Email notifications are sent after the bulk import job is completed, failed, or aborted to the user who performed the bulk import. The email is also CCed to 'Administrator Email Address' set under Settings → Email.

...

Go to the home page, click on the ‘Settings’ card.

Click on the ‘Common’ module and select property ‘Pre-validate Records Limit’

Set ‘0’ for the ‘New Value’ field and click on ‘Update’

Note: When validation is disabled, the system shows errors for the failed records but still uploads the successful records.

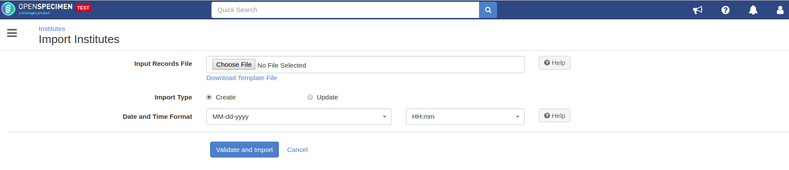

What is 'Validate and Import'?

(v3.4 onwards) In a regular bulk upload, if 100 records are uploaded and 60 fail while only 40 are processed successfully, the user has to filter out the failed records, rectify them, and upload them again for reprocessing. The 'Validate and Import' feature validates the complete file before upload.

- If any record in the CSV file fails, the whole job fails and nothing is saved to the database. Records are saved only when every record succeeds.

- If there is an error, the system returns a status log file with an error message for each incorrect record, so that the user can rectify those records and upload again.

- The time required to validate the records is the same as that required to upload the records.

- The maximum number of records that can be validated in one job is set to 10000 by default. It can be changed from Settings → Common → Pre-validate Records Limit.

- If the file has more records than this limit (10,000 by default), the system shows the message 'Number of records to import are greater than 10000, do you want to proceed without validating input file?'.

- If the user proceeds without validation, the records are processed individually.
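The difference between the two modes can be illustrated with a small toy model. This is only a sketch of the behaviour described above, not the application's implementation: `import_records`, `is_valid`, and `validate_first` are hypothetical names introduced for illustration.

```python
def import_records(records, is_valid, validate_first=True):
    """Toy model of the two import modes; returns (saved, failed).

    validate_first=True  -> 'Validate and Import': all-or-nothing.
    validate_first=False -> records are processed individually.
    """
    failed = [r for r in records if not is_valid(r)]
    if validate_first and failed:
        # Any failure aborts the whole job: nothing is saved.
        return [], failed
    saved = [r for r in records if is_valid(r)]
    return saved, failed


# The 100-record example from the text: 40 valid, 60 invalid.
records = list(range(100))
is_valid = lambda r: r < 40

saved, failed = import_records(records, is_valid, validate_first=False)
print(len(saved), len(failed))  # 40 60

saved, failed = import_records(records, is_valid, validate_first=True)
print(len(saved), len(failed))  # 0 60
```

In both modes the failed records are reported. The difference is whether the successful records are saved before the failures are fixed.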

Can the Super Admin view import jobs of all users?

Yes, a "Super Administrator" can view the import jobs of all users. Note that a user can bulk upload data from two places:

- Outside an individual CP, i.e. from the collection protocol list page (Collection Protocols → More → Import)

- For a specific collection protocol (Collection Protocols → Participant List → More → Import)

The jobs are visible to the Super Admin based on how the user uploaded the file. In other words, jobs uploaded at the global level won't be visible under a specific CP, and vice versa.

Can the institute admin view import jobs of other users?

...

Yes, same as the Super Admin. An Institute Admin can view the import jobs of users from their own institute.

Tips and tricks to import a large number of records

If you have a very large amount of data to import (say, hundreds of thousands or millions of records), you can follow the steps below to speed up the import:

- Do imports via folder import and not via UI. Refer to Auto bulk import for this.

- Break the large file into smaller files, say 100K specimens each. The problem with one large file is that the system takes a very long time just to read it, i.e. before it starts processing even the first row.

- If importing via the UI, import the file as a Super Admin user. This tells the system not to spend time on privilege checks. This happens automatically if you use auto bulk import by dropping the file in the server folder.

- Schedule the import during off-peak hours, e.g. daily from 5 PM to 8 AM the next day, or over the weekend. You can do this by putting a fixed number of files in the folder: once you know that one file of 100K records takes 1 hour, you can put, say, 14 files in the folder.
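The splitting and scheduling steps above can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not a tool shipped with the application: the helper names `split_csv` and `files_per_window` and the output file naming are hypothetical, and the sketch assumes the first line is the template header and that each row can be imported independently of the rows in other files.

```python
import csv
from pathlib import Path


def split_csv(src_path, out_dir, rows_per_file=100_000):
    """Split a CSV into parts of at most `rows_per_file` data rows.

    The header row is repeated at the top of every part.
    Returns the list of part paths (hypothetical helper).
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    stem = Path(src_path).stem
    parts = []
    with open(src_path, newline="", encoding="utf-8") as src:
        reader = csv.reader(src)
        header = next(reader, None)
        if header is None:
            return parts  # empty input, nothing to split
        out = writer = None
        count = 0
        try:
            for row in reader:
                # Start a new part on the first row or when the current one is full.
                if out is None or count >= rows_per_file:
                    if out is not None:
                        out.close()
                    part = out_dir / f"{stem}_part{len(parts) + 1:03d}.csv"
                    out = open(part, "w", newline="", encoding="utf-8")
                    writer = csv.writer(out)
                    writer.writerow(header)
                    parts.append(part)
                    count = 0
                writer.writerow(row)
                count += 1
        finally:
            if out is not None:
                out.close()
    return parts


def files_per_window(window_hours, hours_per_file):
    """How many files fit in an off-peak window, given a measured time per file."""
    return int(window_hours // hours_per_file)
```

With a 15-hour window (5 PM to 8 AM) and one hour per file, `files_per_window(15, 1)` evaluates to 15, so the 14 files suggested above leave roughly an hour of slack.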